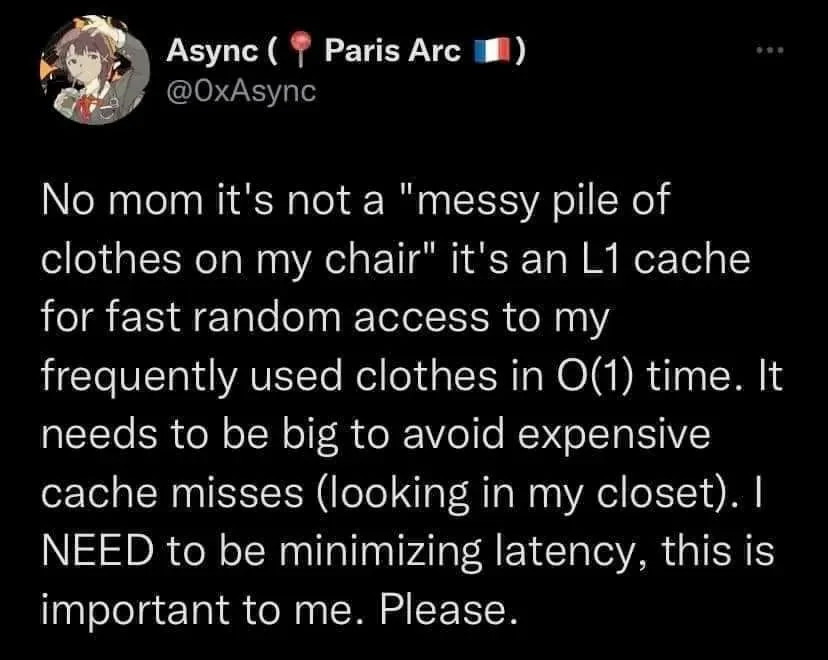

This tweet makes a lot more sense to me now after my computer architecture class. Not because I suddenly care about electrical engineering (I don’t), but because it captures something I wish I’d understood earlier: the most elegant algorithm in the world can perform terribly if it fights against the underlying hardware.

The Uncomfortable Truth About Performance

Nobody really tells you this when you’re learning to code, but you can write perfectly correct, beautifully structured code that runs like garbage. And it’s not because you’re a bad programmer. It’s because you’re ignoring the machine underneath.

I used to think performance was mostly about Big O notation. Linear time good, quadratic time bad, exponential time very bad. Clean, simple, mathematical. Then cache systems came along and made that seem incomplete.

Consider two ways to sum a 2D array:

// Method 1: Row by row

for (i = 0; i < N; i++)

for (j = 0; j < N; j++)

sum += array[i][j];

// Method 2: Column by column

for (j = 0; j < N; j++)

for (i = 0; i < N; i++)

sum += array[i][j];Same algorithm. Same Big O complexity. One can be 10x faster than the other. The difference is that Method 1 works with your cache, Method 2 fights it.

The Hardware Conspiracy You Never Knew About

Your computer is running a massive conspiracy behind your back, and it’s all about pretending memory is something it’s not.

When you write x = array[100], you think you’re fetching one number. Actually, your computer probably just grabbed 64 bytes (an entire cache line) including array[100] through array[115], betting that you’ll need the neighbors soon. If you immediately access array[101], you look like a genius. If you jump to array[5000], you just wasted a bunch of time and energy.

The memory hierarchy is basically a giant shell game:

- Registers: Instant access, but there are like 16 of them

- L1 Cache: ~1 cycle, ~32KB

- L2 Cache: ~10 cycles, ~256KB

- L3 Cache: ~40 cycles, ~8MB

- Main Memory: ~100+ cycles, several GB

- Storage: Thousands of cycles, basically infinite

Your processor is constantly making bets about what data to keep at each level, and when those bets are wrong, your code crawls.

The Optimizations You Never Asked For

What struck me wasn’t the electrical engineering details (still don’t care about transistors), but the sheer audacity of these optimizations. Your computer is making thousands of predictions per second about your program’s behavior:

Spatial locality: “They just accessed this byte, so I’ll grab the next 63 bytes too.” This is why processing arrays sequentially is fast and jumping around randomly is slow.

Temporal locality: “They just used this data, so I’ll keep it handy in case they need it again.” This is why that inner loop variable stays blazingly fast while the outer loop variable gets slower.

These predictions work. Modern caches achieve 90-95% hit rates. Your computer is guessing correctly about your next move 19 times out of 20, based purely on patterns in how programs typically behave.

When Beautiful Code Meets Ugly Reality

This is where things get philosophically interesting for me. I love clean, abstract code. I want to think in algorithms and data structures, not worry about whether my data is aligned on 64-byte boundaries. But ignoring hardware reality doesn’t make it go away - it just makes your code slower.

Consider sorting algorithms. Merge sort is theoretically elegant: clean O(n log n), divide and conquer, beautiful recursion. But quicksort often beats it in practice, partly because quicksort has better cache behavior - it works on smaller subarrays that fit in cache, while merge sort is constantly shuffling data between cache levels.

Or hash tables versus balanced trees. Hash tables should be O(1) vs O(log n) - no contest, right? But if your hash function causes cache misses on every lookup while the tree keeps everything in a few cache lines, suddenly that log factor doesn’t matter much.

The Skills They Don’t Teach in Algorithms Class

What my architecture class really showed me is that there’s a whole dimension of programming skill that CS curricula barely touch: understanding the machine you’re programming for.

It’s not about becoming a hardware engineer. It’s about developing intuition for questions like:

- Will this data access pattern play nice with caches?

- Am I accidentally creating false sharing between CPU cores?

- Does this data structure fit in the fast levels of memory?

- Am I making the branch predictor’s job impossible?

These aren’t academic curiosities. I’ve seen 2-line changes that doubled program performance, just because someone understood cache line alignment. I’ve seen perfectly good algorithms replaced with “worse” ones because the worse algorithm was cache-friendly.

The Bigger Pattern

This isn’t just about caches. It’s about a fundamental tension in computer science between beautiful abstractions and messy reality.

We want to think in terms of infinite, uniform memory where every access costs the same. Reality gives us a complex hierarchy where costs vary by 1000x depending on luck and access patterns.

We want to believe that algorithmic complexity tells the whole performance story. Reality says that constant factors, hardware quirks, and implementation details often matter more than Big O notation.

We want clean separation between software and hardware concerns. Reality says that the best programmers understand both layers and how they interact.

What I’m Taking Away

I still don’t want to design CPUs or debug circuit boards. But this deep dive into cache systems has changed how I think about writing code. Performance isn’t just about picking the right algorithm anymore - it’s about picking the right algorithm for the machine you’re running on.

The most valuable skill might not be memorizing more data structures, but developing intuition for how your code maps onto real hardware. Understanding that your beautiful, abstract program has to actually run on a specific chunk of silicon with specific quirks and limitations.

It’s humbling. All those years thinking I could ignore the hardware layer, that good software engineering was purely about logical correctness and clean abstractions. Turns out the machine gets a vote too.

Now I’m curious to dive deeper into systems programming, to understand how these hardware realities shape everything from database design to compiler optimizations. Because once you realize that elegant code isn’t enough - that you need to work with the machine, not just on it - a whole new dimension of programming opens up.